Vuforia Spaces

Contextualizing real-time manufacturing data and machine issues in Spatial XR

PTC acquired Vuforia from Qualcomm in 2015 and was struggling to devise new uses for the technology beyond the Studio and Engine properties that came with the deal. The company started a small R&D team to come up with new concepts for spatial computing…

Excited by the technology and the team assembled so far, I joined in early 2021, and we dove straight into explorations with our key customers and partners to find gaps in the market for our product to tackle.

Roles:

Principal Product Designer

Lead User Researcher

Lean methods and mindset

-

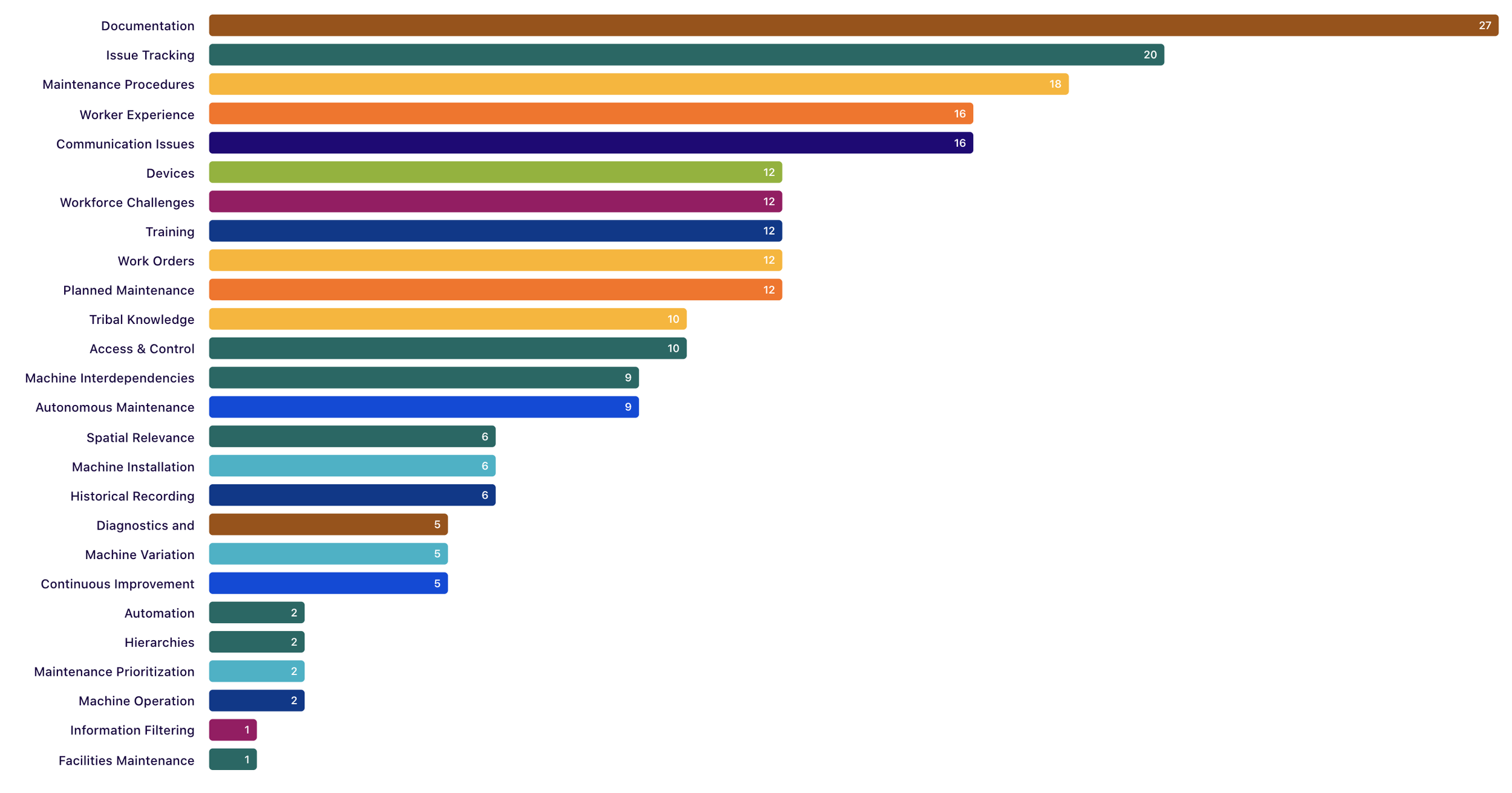

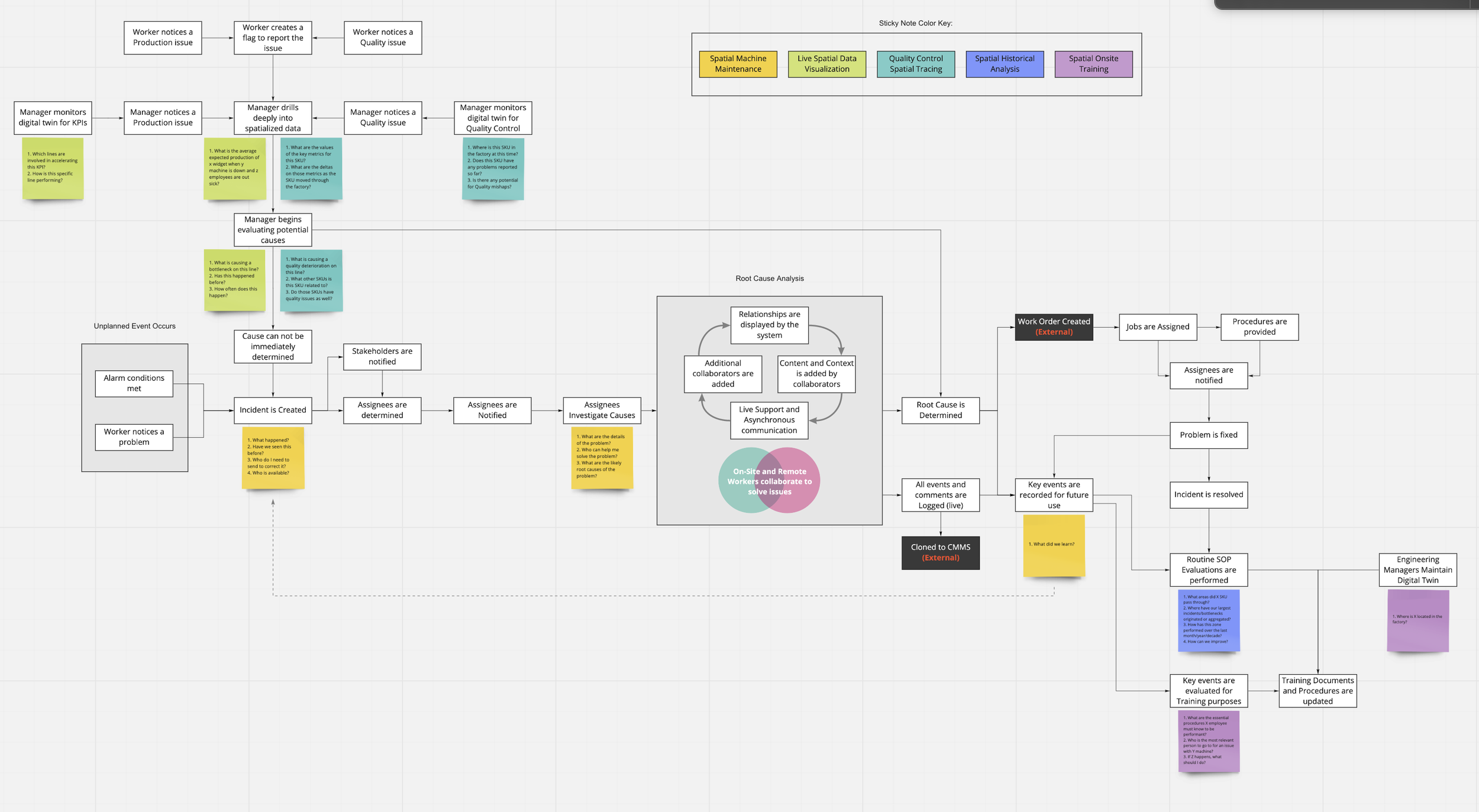

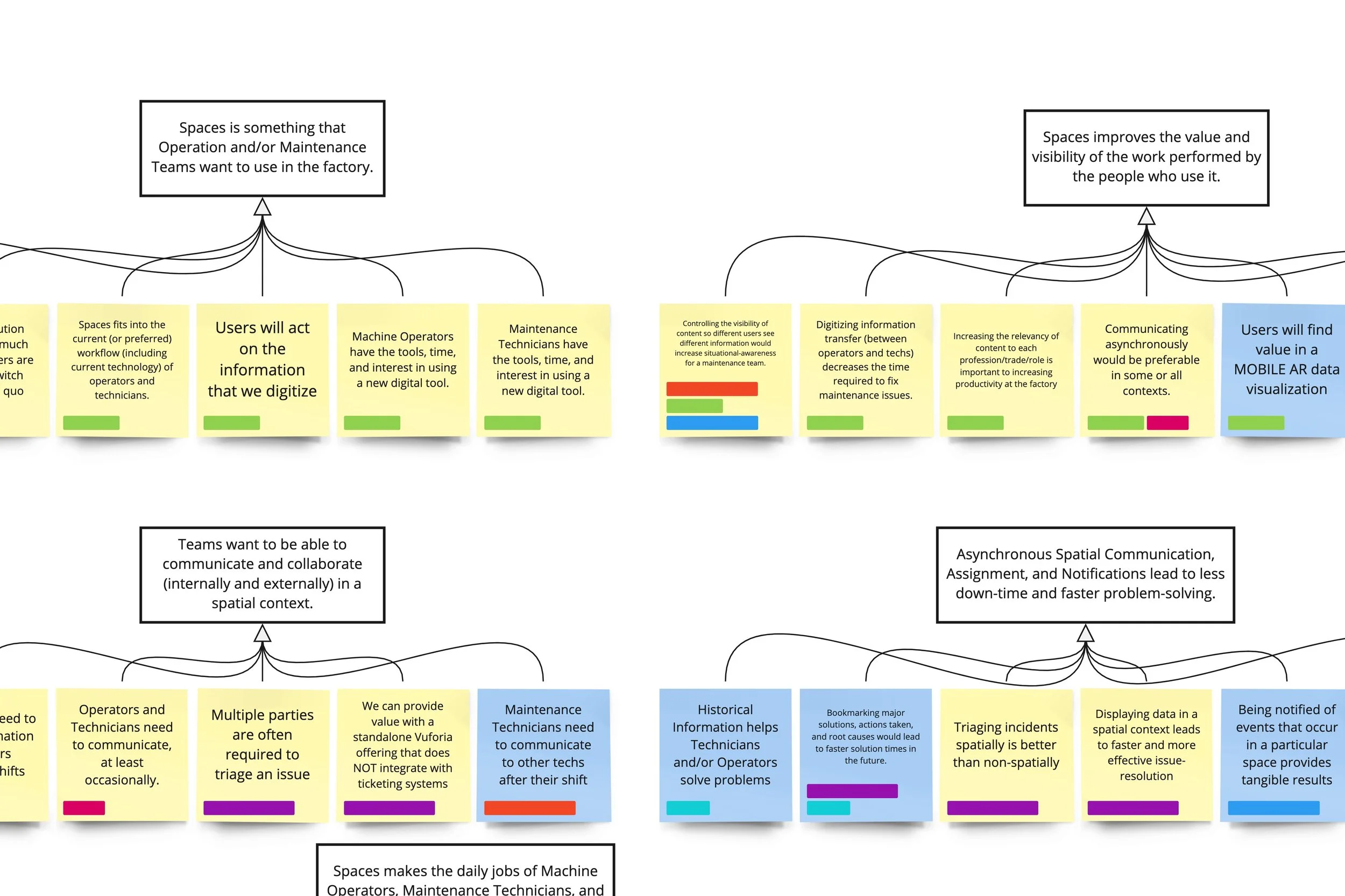

We narrowed our product focus to the most tech-ready industries already easily accessible to PTC (Aerospace, Automotive, and Pharma), then met with our customers and users on-site, conducting interviews and running extensive design exercises. We recorded every insight and quote gleaned from shadowing potential end-users in their day-to-day tasks.

-

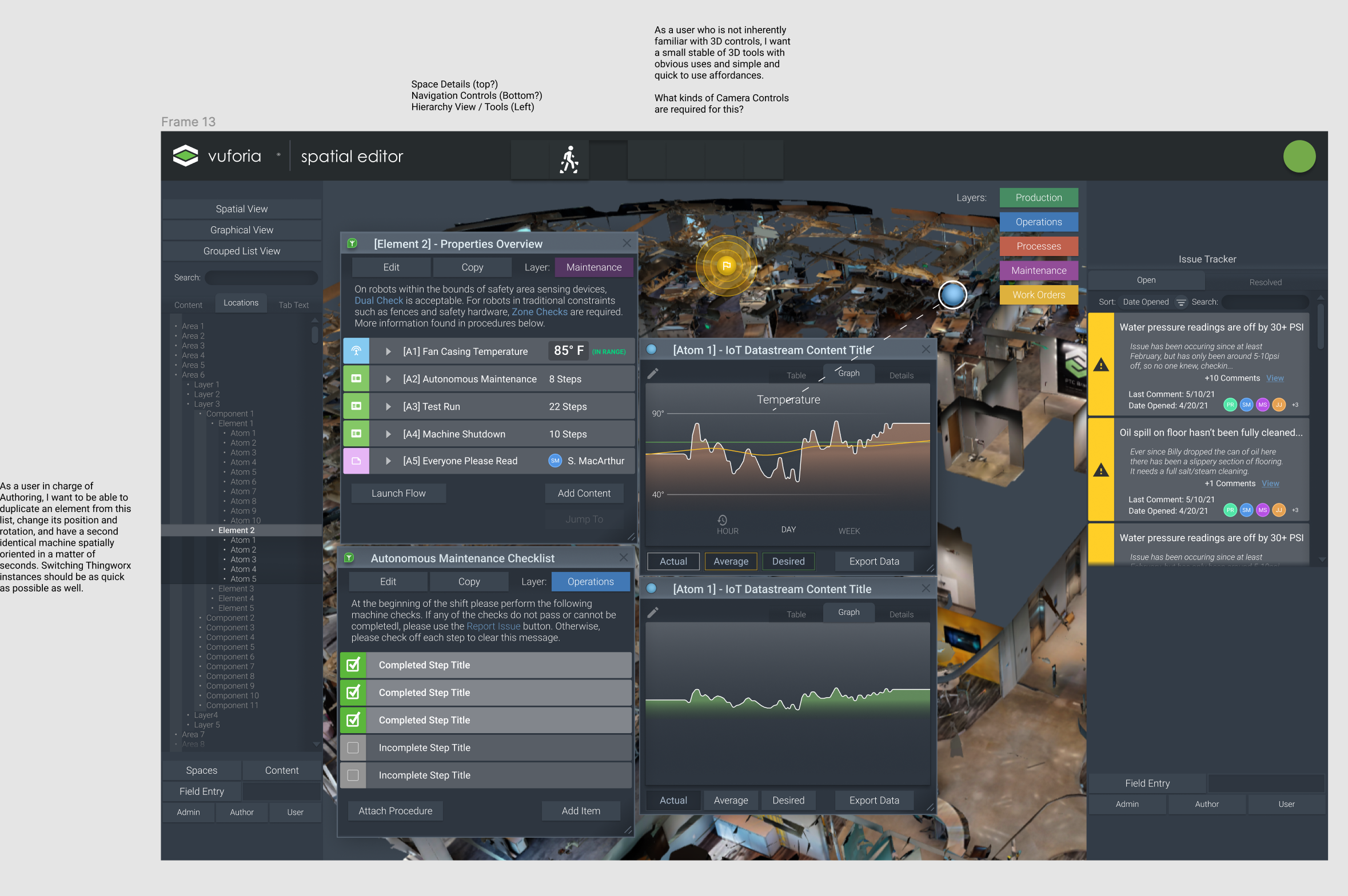

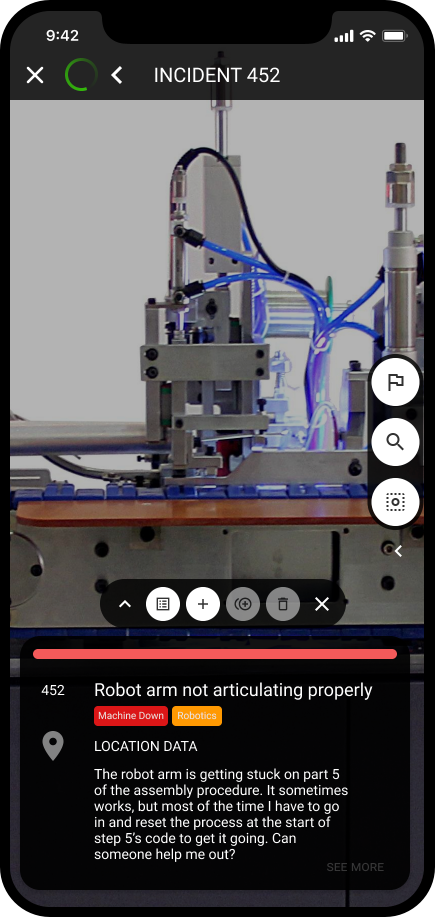

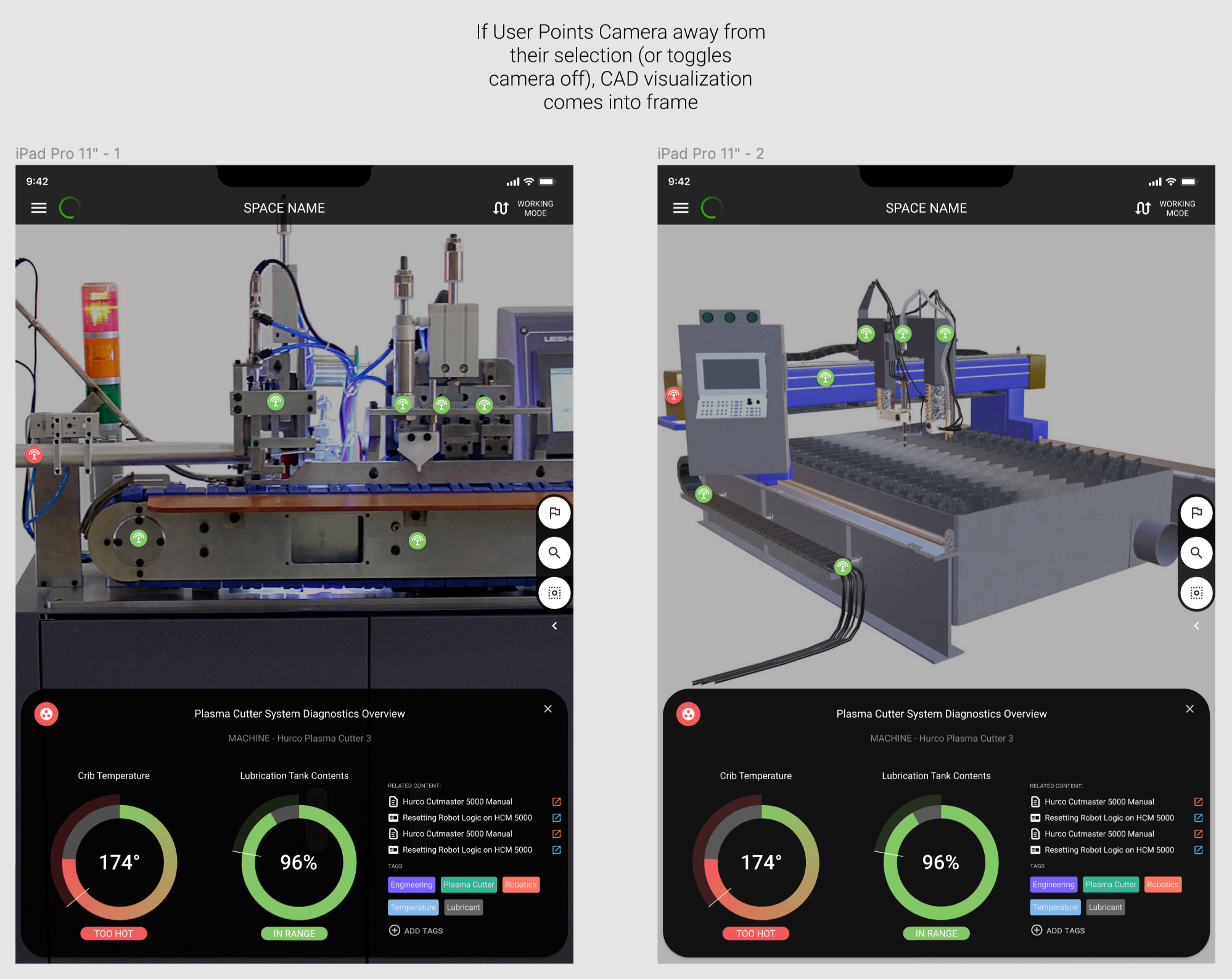

We immediately set up a low-fi spatial graph, which let us contextualize data based on location and node relationships. With quickly designed and implemented prototypes, we had a user-testable interface together right away and put a newer, more refined version in the hands of our interviewees at each visit.
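To make the idea of a spatial graph concrete, here is a minimal sketch of how data might be attached to nodes and looked up either by physical proximity or by node relationship. It is an illustration only, not the team's implementation: the names `SpatialNode`, `SpatialGraph`, `nearby_data`, and `related_data`, along with the sample machines and readings, are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from math import dist


@dataclass
class SpatialNode:
    """A machine or station on the floor (hypothetical model)."""
    node_id: str
    position: tuple[float, float, float]  # metres, in an arbitrary site frame
    data: list[str] = field(default_factory=list)  # attached content, e.g. alerts


class SpatialGraph:
    """Nodes with positions, plus undirected relationships between them."""

    def __init__(self) -> None:
        self.nodes: dict[str, SpatialNode] = {}
        self.edges: dict[str, set[str]] = {}

    def add_node(self, node: SpatialNode) -> None:
        self.nodes[node.node_id] = node
        self.edges.setdefault(node.node_id, set())

    def connect(self, a: str, b: str) -> None:
        # Undirected relationship, e.g. "the conveyor feeds the press".
        self.edges[a].add(b)
        self.edges[b].add(a)

    def nearby_data(self, position: tuple[float, float, float], radius: float) -> list[str]:
        """Content attached to nodes within `radius` metres of `position`."""
        return [
            item
            for node in self.nodes.values()
            if dist(node.position, position) <= radius
            for item in node.data
        ]

    def related_data(self, node_id: str) -> list[str]:
        """Content on the node itself, then on its direct neighbours."""
        ids = [node_id, *sorted(self.edges[node_id])]
        return [item for i in ids for item in self.nodes[i].data]


# Hypothetical sample data for illustration.
graph = SpatialGraph()
graph.add_node(SpatialNode("press", (0.0, 0.0, 0.0), ["Press: hydraulic pressure low"]))
graph.add_node(SpatialNode("conveyor", (3.0, 0.0, 0.0), ["Conveyor: belt speed 1.2 m/s"]))
graph.add_node(SpatialNode("oven", (40.0, 0.0, 0.0), ["Oven: temperature nominal"]))
graph.connect("press", "conveyor")

print(graph.nearby_data((1.0, 0.0, 0.0), radius=5.0))
print(graph.related_data("press"))
```

The two queries reflect the two kinds of context mentioned above: `nearby_data` answers "what is relevant where I am standing," while `related_data` answers "what is relevant to the thing I am looking at and whatever is connected to it."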

-

A benefit of being a new micro R&D team in the Vuforia organization was that colleagues from other teams would come to us with anything they had built but had no product for. We were able to pull developers from other projects for a week or two to implement something useful or to land a new product integration just ahead of its release.

-

My central role on the team was to push for constant desirability updates, bringing in technology that already existed but had not yet been designed for end users. I split my design time between the desktop 3D/VR application and the mobile/HMD AR applications, and stayed in constant contact with our customers so I could deliver relevant feature specs to my team as quickly as possible.

A Data-Driven Focus on User Needs

We were introducing new functionality weekly on a Spatial Editor that worked across desktop, mobile, and AR/VR devices.

With Azure Spatial Anchors and LiDAR scanning, we could spin up an instance of our application for an organization on the first day of a workshop and start experimenting with their contextual needs almost immediately. The next week we would introduce compatibility with Matterport scans, and they could upgrade their factory model while we built interfaces for them to import relevant content, from manuals and parts lists to work instructions and embedded web apps.